Content-First AI Readiness Statistics: Why Language Quality Makes Or Breaks Your AI

The Content-First AI Readiness Statistics That Matter

In her Software Oasis™ B2B Executive AI Bootcamp session, Sarah Johnson argued that most AI “failures” are actually content failures in disguise. When teams ship messy, inconsistent language into their systems, AI simply scales that confusion, leading to hallucinations, conflicting answers, and avoidable rework. Her four‑block Content‑First framework (diagnose the disconnect, operationalize the framework, rewrite for impact, and test, tweak, transform) is designed to turn scattered content into infrastructure that both humans and AI can rely on.

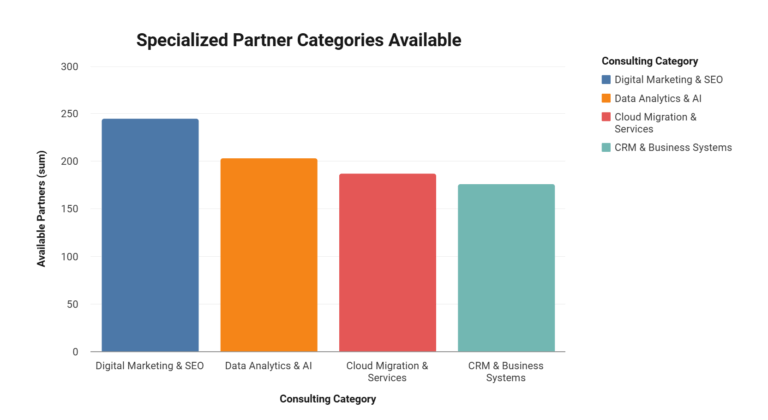

Sarah’s approach fits closely with the Software Oasis Experts article Content‑First AI Readiness, which argues that AI success is less about picking the “best” model and more about preparing your language, structures, and governance so those models have something coherent to learn from. It also connects to the broader AI and consulting benchmarks discussed in Consulting Statistics, where many organizations report stalled AI initiatives due to poor data and content foundations rather than technical limitations.

Diagnosing Message Misfires Before AI Makes Them Worse

The Cost Of Inconsistent Language

Sarah described block one, “Diagnose the disconnect,” as a four‑step process: surface root causes, identify misfire patterns, audit the current content process, and reveal the cost of inconsistency. Misalignment often shows up when different teams use different words for the same concept, or the same word for different concepts, leading to a hidden fragmentation of meaning across marketing, product, UX, support, and operations. These patterns mirror what many firms report in Consulting Statistics: AI & Automation Benchmarks, where a large share of stalled AI initiatives are tied to weak data, content, and governance foundations rather than to the models themselves.

This problem becomes acute the moment AI is introduced. She illustrated this with a “smart save” feature that is labeled one way by marketing, another way by product, another by UX, another by support, and yet another by operations. When an AI assistant is trained on all those sources, it mixes terminology and descriptions, generating answers that no team actually intended and eroding customer trust. Research on AI usability and human–AI interaction in journals such as ACM Transactions on Computer-Human Interaction and Information Systems Research similarly finds that inconsistent language and poorly aligned mental models are major drivers of user confusion and perceived AI unreliability.

AI As An Amplifier Of Meaning Systems

Sarah summarized AI readiness this way: AI is only as strong as the definitions and patterns it is fed. If teams cannot agree on core terms, actions, and concepts, AI will not fix the inconsistency—it will replicate it at scale. She argued that structured content is non‑negotiable: AI performs best when content is well‑defined, consistent, governed, and documented, and it struggles when content is scattered, duplicated, or siloed, no matter how advanced the underlying model is.

This view aligns with findings from large‑scale studies of enterprise AI deployments, where authors have noted that organizations with stronger information governance and content standards report higher satisfaction and ROI from AI projects compared with peers who treat AI as a plug‑and‑play solution. In that sense, the most important content‑first AI readiness statistics are not about model accuracy in isolation but about how often AI outputs align with shared internal definitions and user expectations.

Operationalizing Content-First For AI

Core Message Pillars And Modular Content

In block two, “Operationalize the framework,” Sarah outlined how to build a roadmap that makes content‑first thinking real. She starts by clarifying core message pillars—foundational themes, brand voice, and value propositions—and then structures a Content‑First system using modular content components that can scale across channels and experiences.

She stressed the importance of mapping content to user journeys, so that each stage of the customer’s path is supported by the right explanations, reassurances, and prompts. Just as importantly, she highlighted the need to establish consistent language standards: a shared lexicon that unifies messaging everywhere and is one of the strongest defenses against AI confusion and misalignment.
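A lexicon does the most good when it is enforced rather than just documented. The Python sketch below is one hypothetical way to do that: it assumes the lexicon is stored as a simple mapping from each canonical term to the variant labels teams have drifted into, then flags or rewrites those variants in a draft. The “Smart Save” entry and its variants are invented examples, not labels from the talk, and the helper names `lint` and `normalize` are illustrative.

```python
import re

# Hypothetical lexicon: each canonical term maps to variant labels teams have drifted into.
# "Smart Save" and its variants are invented examples, not labels from a real product.
LEXICON = {
    "Smart Save": ["auto-save", "autosave", "quick save", "save assist", "intelligent save"],
}


def build_pattern(variants):
    """Compile one case-insensitive regex that matches any variant as a whole phrase."""
    # Longest variants first, so a longer phrase wins over a shorter one it contains.
    ordered = sorted(variants, key=len, reverse=True)
    alternation = "|".join(re.escape(v) for v in ordered)
    return re.compile(rf"\b(?:{alternation})\b", re.IGNORECASE)


PATTERNS = {canonical: build_pattern(v) for canonical, v in LEXICON.items()}


def lint(text):
    """Return (variant found, canonical term) pairs, in order of appearance per term."""
    findings = []
    for canonical, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((match.group(0), canonical))
    return findings


def normalize(text):
    """Rewrite every known variant to its canonical term."""
    for canonical, pattern in PATTERNS.items():
        text = pattern.sub(canonical, text)
    return text


draft = "Turn on autosave, then use Quick Save before you exit."
print(lint(draft))       # [('autosave', 'Smart Save'), ('Quick Save', 'Smart Save')]
print(normalize(draft))  # Turn on Smart Save, then use Smart Save before you exit.
```

A check like this could sit inside a content review workflow; whether it only flags variants or rewrites them automatically is a governance decision rather than a technical one.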

Content As Infrastructure, Not Filler

Sarah framed her entire approach as treating content as infrastructure: every word has a purpose, a structure, and an impact. She contrasted this with common practice, where content is treated as last‑minute filler added after UX and systems are already defined. Studies in fields like information architecture and UX research, including empirical work reported in journals such as Journal of Information Architecture and Behaviour & Information Technology, have found that content quality and structure significantly influence task success rates, error rates, and user satisfaction—metrics that become even more critical when AI is layered on top of digital experiences.

Rewriting And Testing For AI-Ready Performance

Rewriting For Clarity, Connection, And Conversion

In block three, “Rewrite for impact,” Sarah emphasized that content‑first AI readiness is not just about tidying up language; it is about rewriting content within the framework for clarity, connection, conversion, and AI readiness. That means:

- Involving the right cross‑functional people in content creation

- Elevating clarity while preserving brand personality and tone

- Writing to engage users emotionally and drive decisions

- Outlining content so narratives align with audience needs and user strategy

Everything in this phase is user‑centered, and the result is content that both humans and AI can interpret consistently.

Testing, Tweaking, And Transforming

Block four, “Test, tweak, transform,” is where Sarah’s content‑first AI readiness statistics become concrete. She recommended:

- Running usability tests with real users to ensure clarity and impact

- Analyzing feedback to find what works and what confuses users

- Iterating with purpose: refining content in successive rounds to increase effectiveness

- Finalizing and activating the content‑first framework to stop misfires, drive conversion, and enable AI to scale high‑performing messaging

Her experience at TIAA offered a compelling example: by conducting extensive user research—listening to call‑center conversations, understanding fears about online financial transactions, and designing step‑by‑step processes with clear explanations for each piece of requested information—her team increased submission rates from 23% to 58% and eventually to 73%. That single set of statistics illustrates how a content‑first approach can materially change user behavior, independent of any AI.
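Because a move from 23% to 58% can be described either as a percentage‑point gain or as a relative increase, it helps to compute both. The short Python calculation below uses only the three rates quoted above; the helper name `lift` is illustrative.

```python
def lift(before, after):
    """Return (percentage-point change, relative change in percent) for two rates given in percent."""
    points = after - before
    relative = (after - before) / before * 100
    return points, relative


baseline = 23  # submission rate (%) before the redesign, as reported for TIAA
for stage, rate in [("intermediate", 58), ("eventual", 73)]:
    points, relative = lift(baseline, rate)
    print(f"{stage}: +{points} points, {relative:.0f}% relative increase")
# intermediate: +35 points, 152% relative increase
# eventual: +50 points, 217% relative increase
```

In other words, the eventual rate is a little more than three times the baseline, which is why stating which kind of change is being reported matters when these numbers are compared across projects.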

Content-First AI Readiness In Practice

AI Readiness As Organizational Maturity

Sarah defined AI readiness as the organizational maturity to give AI what it actually needs: clear meaning, structured content, aligned teams, and a defined purpose. She argued that organizations often jump into AI without answering basic questions such as:

- What problem are we solving?

- What decision or workflow does AI improve?

- What does good look like?

Without clear answers, AI becomes a cost center instead of a performance engine. Governance structures (content standards, review workflows, quality checks, and guidelines on where AI is appropriate) then become essential to keep AI from introducing risk rather than value.

How This Fits With Broader AI And Consulting Statistics

The patterns Sarah described match themes that appear across consulting and academic AI research. Studies summarized in Consulting Statistics and other industry benchmarks frequently find that a large share of AI initiatives stall or underperform because foundational elements like content governance, terminology, and cross‑team alignment were never fully addressed. Academic articles in AI and information systems likewise highlight that clarity of objectives, high‑quality structured inputs, and organizational alignment are key predictors of AI project success.

Viewed together with Content‑First AI Readiness, Sarah’s framework suggests that the most important content‑first AI readiness statistics are not model parameters or token counts but:

- Submission rates, completion rates, and error rates before and after content‑first redesigns

- Consistency of terminology across departments and systems

- The reduction in rework, support tickets, and AI hallucination incidents after aligning language and structure
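Terminology consistency, the second metric above, can be tracked with a very simple score. The sketch below assumes a terminology audit has already recorded which label each team uses for a given concept; the team labels are hypothetical, echoing the “smart save” example, and the score (the share of teams using the most common label) is one possible definition rather than a standard measure.

```python
from collections import Counter


def consistency_score(labels_by_team):
    """Return the most common label and the share of teams using it (1.0 = full agreement)."""
    if not labels_by_team:
        raise ValueError("no labels to score")
    # Compare labels case-insensitively, ignoring surrounding whitespace.
    counts = Counter(label.strip().lower() for label in labels_by_team.values())
    most_common_label, count = counts.most_common(1)[0]
    return most_common_label, count / len(labels_by_team)


# Hypothetical audit of how five teams name one feature; these labels are invented.
smart_save_labels = {
    "marketing": "Smart Save",
    "product": "Auto-Save",
    "ux": "Save Assist",
    "support": "autosave",
    "operations": "Smart Save",
}

label, score = consistency_score(smart_save_labels)
print(label, score)  # smart save 0.4
```

This version treats “Auto-Save” and “autosave” as different labels. Folding near‑duplicates together first (for example, through a lexicon mapping) would raise the score, so the normalization rule should be agreed on before the metric is compared over time.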

Get those fundamentals right, and AI becomes far more likely to amplify clarity rather than chaos.